A Closer Look – MIT Lab’s 8D display

The clever folks at MIT Media Lab have created a prototype screen that behaves just like a window.

The 8D Display presents 3D scenes without glasses, and as viewers move their heads the perspective adjusts both horizontally and vertically, without any need to wear tracking devices.

What is most distinctive, however, is its real-time relighting capability. That is, if you were to shine a torch into the screen, the virtual model within the scene would be illuminated from the direction of the real light source. Put your finger in front of the torch, and you will even see a shadow of your finger falling on the object. The lighting effects are rendered on the virtual object in real time, and the object itself does not need to be static.
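To make the relighting idea concrete, here is a minimal Python sketch under heavily simplified assumptions: the light arriving at the screen is treated as a handful of rays, each with a direction (pointing from the surface towards the light) and an intensity, and the virtual surface is purely diffuse (Lambertian). A finger in front of the torch simply sets the intensity of the rays it blocks to zero. The function names and the albedo value are hypothetical; this is not MIT's 8D rendering pipeline, which works with full 4D light fields on the GPU.

```python
import math

# Hypothetical, simplified model: diffuse (Lambertian) shading of one surface
# point from a set of captured incident rays. Not MIT's actual pipeline.

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def shade_point(normal, incident_rays, albedo=0.8):
    """Sum the diffuse contribution of each captured ray.

    incident_rays: list of (direction, intensity) pairs, where direction points
    from the surface towards the light. A blocked ray has intensity 0.
    """
    n = normalize(normal)
    total = 0.0
    for direction, intensity in incident_rays:
        l = normalize(direction)
        cos_theta = sum(a * b for a, b in zip(n, l))
        total += intensity * max(0.0, cos_theta)  # light from behind adds nothing
    return albedo * total

if __name__ == "__main__":
    up = (0.0, 0.0, 1.0)
    torch_overhead = [((0.0, 0.0, 1.0), 1.0)]
    torch_at_45 = [((1.0, 0.0, 1.0), 1.0)]
    finger_blocks_torch = [((0.0, 0.0, 1.0), 0.0)]
    print(shade_point(up, torch_overhead))       # fully lit
    print(shade_point(up, torch_at_45))          # dimmer, lit from the side
    print(shade_point(up, finger_blocks_torch))  # in shadow
```

Moving the torch changes the ray directions and therefore which parts of the object are bright; blocking rays darkens exactly the points they would have lit, which is the finger-shadow effect in miniature.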

The "8D Display" both captures and presents the scene, alternating rapidly between the two modes so that viewers can interact with the scene and see it at the same time. The goal is to inspire novel 3D interfaces in which users interact with digital content using light widgets, physical objects and gestures.

It is the latest iteration of the BiDi screen – an MIT Media Lab bi-directional display project originally developed in 2009. BiDi set out to address a limitation of existing resistive and capacitive displays: they can sense interaction only when a user touches the surface.

BiDi was designed to enable multi-touch interaction AND off-screen 3D gestures, without requiring the user to wear tracking equipment. It was inspired by a series of panels from manufacturers such as Sharp and Planar, which incorporate an array of light-sensing pixels within the LCD. When a user touches the screen, light is prevented from reaching the corresponding sensors, and the CPU can register the location of the touch. The problem is that as soon as the finger moves away from the screen, it falls out of focus for the light sensors and no location can be registered.
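The touch-sensing principle described for these sensor-in-pixel panels can be sketched in a few lines of Python. The sketch assumes the sensor array delivers a 2D grid of brightness readings and that a touching finger shows up as a patch of readings below a chosen threshold; the function name `locate_touch` and the threshold value are hypothetical, and real panels involve calibration and noise filtering that are not shown.

```python
def locate_touch(frame, threshold):
    """Return the (row, col) centroid of sensor pixels darker than threshold.

    frame: list of rows of brightness readings from the in-pixel light sensors.
    Returns None when no pixel is dark enough, i.e. nothing is touching.
    """
    row_sum = col_sum = count = 0
    for r, row in enumerate(frame):
        for c, value in enumerate(row):
            if value < threshold:  # light blocked by a finger on the glass
                row_sum += r
                col_sum += c
                count += 1
    if count == 0:
        return None
    return (row_sum / count, col_sum / count)

if __name__ == "__main__":
    # 5x5 sensor frame: ambient light everywhere except a 2x2 fingertip shadow.
    frame = [[200] * 5 for _ in range(5)]
    for r in (1, 2):
        for c in (2, 3):
            frame[r][c] = 10
    print(locate_touch(frame, threshold=50))  # (1.5, 2.5)
```

Once the finger lifts off the glass its shadow blurs, no pixel falls below the threshold and the function returns None, which is the limitation the BiDi screen set out to remove.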

MIT showed that by displacing the optical sensor array about 2.5 cm behind the LCD, the LCD itself can be used to modulate the light reaching the sensors, so that depth as well as position can be registered. How?

When the LCD screen is in capture mode, the backlight is switched off and the LCD panel displays a pinhole array. A pinhole array (an array of transparent apertures) is essentially hundreds of tiny dots through which light can pass to the sensor behind the screen.

Any object placed in front of the LCD screen in this mode (such as a finger) is picked up by the optical sensor behind it as hundreds of tiny, low-resolution images (each pinhole on the LCD acts as a tiny lens). Each image is slightly different, because each pinhole admits light from the object at a slightly different angle, and therefore from a slightly different perspective. Together these low-resolution images form a 4D light field, which can be separated into individual depth planes. By analysing the differences between the images, depth can be calculated. The result is accurate off-screen gesture sensing. A user can interact with virtual objects by touching the screen (to select an item, for example) and then change their scale by moving a hand towards and away from the screen.
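The depth calculation can be illustrated with simple pinhole geometry. In a one-dimensional cross-section, suppose the pinholes sit on the screen plane at known positions and the sensor lies a distance d behind it (about 2.5 cm in the BiDi design). A point at lateral position X and distance z in front of the screen images through the pinhole at x onto the sensor with an offset of (x - X) * d / z relative to that pinhole, so the offset changes linearly from pinhole to pinhole with slope d / z. Fitting that line across many pinholes recovers both position and depth. This Python sketch, with hypothetical function names and units in centimetres, illustrates the principle only; it is not MIT's light field decoding, which works on full 4D data and separates it into depth planes.

```python
# Flatland (1D) pinhole model, units in cm. Hypothetical helpers for illustration.

def simulate_offsets(point_x, point_z, pinhole_xs, sensor_gap):
    """Offset of the point's image behind each pinhole, relative to that pinhole."""
    return [(x - point_x) * sensor_gap / point_z for x in pinhole_xs]

def estimate_point(pinhole_xs, offsets, sensor_gap):
    """Least-squares line fit of offset against pinhole position.

    offset = (x - X) * d / z, so slope = d / z and intercept = -X * d / z.
    Returns the estimated (X, z).
    """
    n = len(pinhole_xs)
    mean_x = sum(pinhole_xs) / n
    mean_o = sum(offsets) / n
    sxx = sum((x - mean_x) ** 2 for x in pinhole_xs)
    sxo = sum((x - mean_x) * (o - mean_o) for x, o in zip(pinhole_xs, offsets))
    slope = sxo / sxx
    if slope == 0:
        raise ValueError("no parallax between views: point is effectively at infinity")
    intercept = mean_o - slope * mean_x
    return -intercept / slope, sensor_gap / slope

if __name__ == "__main__":
    d = 2.5                                    # sensor-to-LCD gap from the article
    pinholes = [0.5 * i for i in range(8)]     # hypothetical 0.5 cm pinhole pitch
    offsets = simulate_offsets(1.0, 10.0, pinholes, d)  # fingertip 10 cm away
    x, z = estimate_point(pinholes, offsets, d)
    print(f"position {x:.2f} cm, depth {z:.2f} cm")
```

A closer finger produces a steeper slope (more parallax between neighbouring views), which is why moving a hand towards or away from the screen can be read as a continuous depth signal.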

To display the scene at the same time, the LCD rapidly switches between pinhole mode (backlight off) and display mode (backlight on).

While the 8D display aims to achieve the same input capability as the BiDi screen, the mechanics are a little different. Rather than using pinhole apertures, it captures the 4D light field with tiny lenses, which gather more light. The 8D display also delivers glasses-free 3D output with horizontal and vertical parallax as viewers change their viewpoint.

Simultaneous real-time 4D lighting and 4D capture (hence "8D") has only now become feasible, and to achieve real-time rendering and decoding of light fields, MIT Media Lab had to develop a new 8D GPU pipeline.

In the future, this technology could make human-computer interaction (HCI) simpler and more intuitive. Medical applications are obvious: for example, different intensities of light could be used to go deeper and deeper into the anatomical layers of a virtual body, such as the venous or skeletal structure.

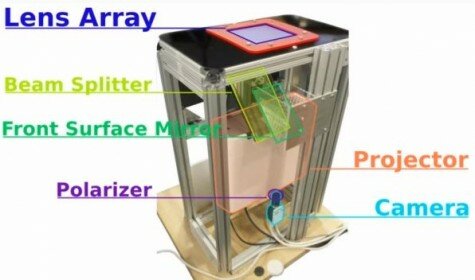

However, the current prototype is very bulky, requiring a projector, a camera and a beamsplitter mirror. When the team built the BiDi screen, SIP (sensor-in-pixel) LCDs were not commercially available, and when they built the 8D display, such panels did not yet have sufficient resolution to achieve the effects the team wanted. So in both cases MIT opted to simulate the SIP LCD with an equivalent optical system which, in the case of the 8D Display, involves a projector and a camera that share a view of the screen through a beamsplitter.

Many of the proposed techniques are currently being demonstrated with large, improvised hardware. To be commercialised, the system will need to shrink considerably, but according to MIT this is highly achievable.

“Much of the potential impact of this project is predicated on the existence of Sensor-In-Pixel (SIP) Liquid Crystal Displays (LCDs). In recent years LCD manufacturers have begun to introduce a variety of semiconductor technologies that combine light sensitive elements into the driver matrix for typical liquid crystal displays [1]. While our real-time prototype was certainly enabled by high-end computer graphics hardware, the optical configuration of the presented camera and projector implementation is somewhat unremarkable. However, in combination with collocated, thin, optical capture and display elements, such as those provided by a SIP LCD, this work suggests a straightforward route to achieving a thin, low-cost, commercially realizable, real-time, 8D display.”

The 8D display is just one of several inventions designed to overcome the prohibitive bandwidth requirements of holographic displays without the need for eyeglasses, using optimized optical hardware and co-designed "compressive" image-encoding algorithms. To date, MIT have explored "compressive displays" composed primarily of multiple layers of high-speed liquid crystal displays (LCDs).

One such project is the Tensor display, which uses several layers of LCD panels to generate a holographic effect. Each image shows a different perspective and is refreshed at 240 hertz. The viewer’s brain adds the frames together to create a single coherent image, although existing technology falls short of the minimum 360 hertz required for a convincing effect. MIT have been working on creating natural 3D images with a multilayer approach for some time. Adding layers increases sharpness and contrast, although it also increases the size of the display. The quality is almost the same as that of still holograms, and multiple viewers can look around the scene both vertically and horizontally.
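A simplified way to model a multilayer display like this: each ray from the backlight passes through one pixel on every layer, so its brightness is the product of those pixels' transmittances, and because the frames change faster than the eye can follow, the viewer perceives the average over a cycle of frames. Rays leaving at different angles cross different pixel combinations, which is how one stack of panels can show different perspectives to different viewpoints. The Python sketch below, with hypothetical names and toy values, only evaluates that forward model; how MIT actually computes the layer patterns is not shown.

```python
# Toy forward model of a time-multiplexed multilayer LCD (hypothetical values).
# frames[t][k][p] = transmittance (0..1) of pixel p on layer k during frame t.

def perceived_ray(frames, ray_pixels, backlight=1.0):
    """Average brightness of one ray over a cycle of frames.

    ray_pixels[k] is the pixel index the ray crosses on layer k.
    Within a frame the layers attenuate the ray multiplicatively; across
    frames the eye integrates, modelled here as a simple mean.
    """
    total = 0.0
    for layers in frames:
        transmitted = backlight
        for layer, pixel in zip(layers, ray_pixels):
            transmitted *= layer[pixel]
        total += transmitted
    return total / len(frames)

if __name__ == "__main__":
    frames = [
        [[1.0, 0.5], [0.5, 1.0]],  # frame 1: layer 0, layer 1
        [[0.0, 1.0], [1.0, 1.0]],  # frame 2: layer 0, layer 1
    ]
    # Three rays crossing the two layers at different angles.
    for ray in [(0, 0), (0, 1), (1, 0)]:
        print(ray, perceived_ray(frames, ray))
```

Each extra layer or frame adds more free values to choose when shaping what every ray shows, which is consistent with the point that more layers improve sharpness and contrast at the cost of a larger display.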

